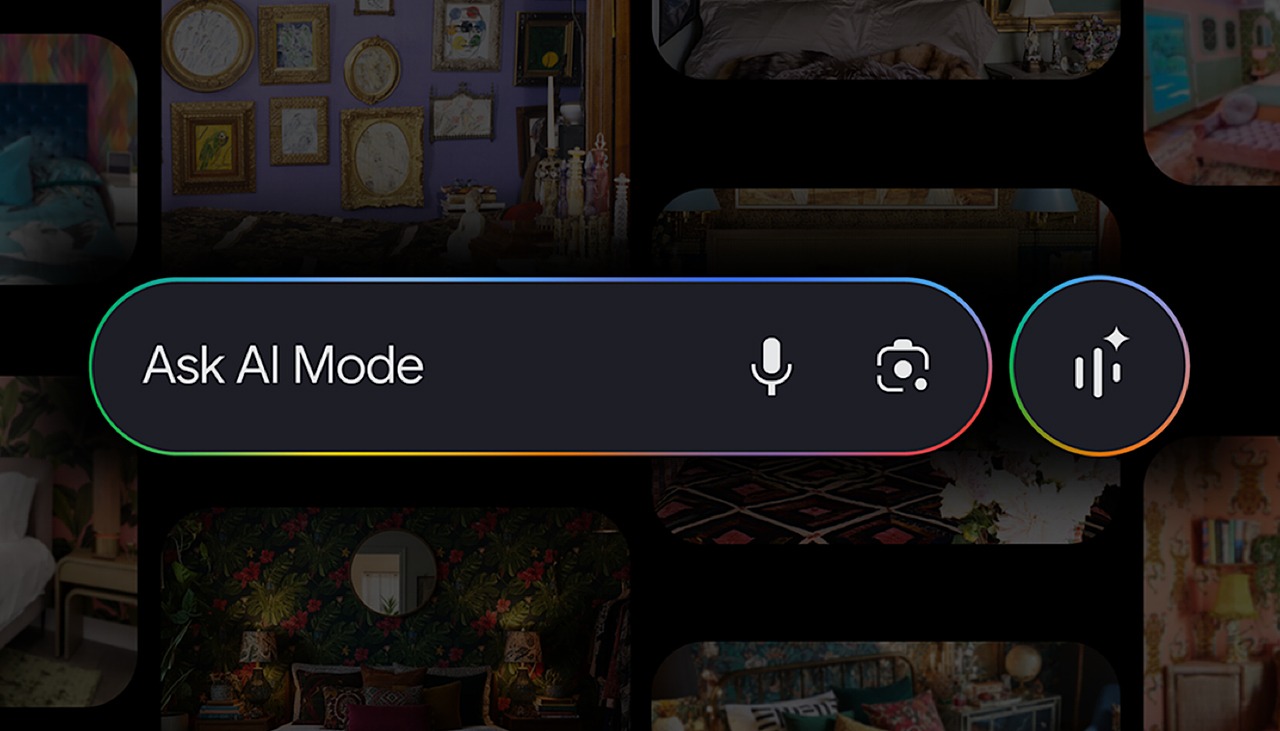

Google AI Mode Gets Visual + Conversational Image Search

What’s New: Visual + Conversational in Google AI Mode

Some highlights:

- You can start with a picture (for example, a room design you like) or a phrase, and then refine the search with follow-up questions.

- Each image result links back to its source, so you can click through for more details.

- In shopping scenarios, you no longer need rigid filters like “brand / size / color.” Instead, you can say something like “show me barrel jeans that aren’t too baggy,” and then narrow it further (for example, “ankle length”).

- The feature is powered by Google’s advances in multimodal AI (language + image understanding) and techniques such as “visual search fan-out.”

Why This Change Matters

This update is more than a neat trick. It signals a deeper shift in how people will search and how content will be discovered. Here’s why it’s important:

Search becomes more intuitive. Often we see something (a style, an object) but can’t put it into precise words. Now you can show and tell, and Google will try to make sense of it.

Better alignment between images and intent. Google will try to understand visual cues and match them with user intent in a conversational context.

Higher stakes for quality visuals. Images that are clear, well-tagged and shown in context have a better chance of being surfaced.

E-commerce gets smarter. Shoppers may not think in terms of exact filters; they might simply describe what they want. A well-maintained product feed may help your items match those descriptions.

SEO is evolving. As search becomes more visual and conversational, relying solely on text-based ranking signals won’t be enough.

How It Works

- Google combines the multimodal (image + text) understanding of its Gemini model with existing capabilities like Lens and Image Search.

- The visual search fan-out method breaks down the image/context into subqueries, exploring various angles (foreground, background, parts) to grasp nuance.

- On mobile, you can zoom into parts of an image (e.g. a lamp in a room) and ask questions specific to that section.

- Personalization and prior user behavior may help Google interpret vague modifiers like “not too baggy” or “subtle print.”

- The system doesn’t necessarily distinguish between “real photos” and AI-generated images; signals like authoritativeness, source and context help determine ranking.
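
To make the fan-out idea above more concrete, here is a toy Python sketch. It is not Google's implementation, whose details are not public; the `Region` type, the `fan_out` and `run_fan_out` helpers, and the `toy_search` backend are all hypothetical names invented for illustration. The sketch only shows the general pattern: expand one image + text request into several narrower subqueries, run them in parallel, and merge the results.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Hypothetical detected part of an image, e.g. a lamp in a room photo."""
    label: str
    role: str  # e.g. "foreground", "background", or "part"


def fan_out(user_text: str, regions: list[Region]) -> list[str]:
    """Expand one request into the original query plus one subquery per region."""
    subqueries = [user_text]  # always keep the original request
    for region in regions:
        subqueries.append(f"{user_text} {region.label} ({region.role})")
    return subqueries


def toy_search(query: str) -> list[str]:
    """Stand-in for a real retrieval backend (assumption); returns fake IDs."""
    return [f"result-for:{query}"]


def run_fan_out(user_text: str, regions: list[Region]) -> list[str]:
    """Run all subqueries in parallel and merge results without duplicates."""
    subqueries = fan_out(user_text, regions)
    with ThreadPoolExecutor(max_workers=4) as pool:
        batches = list(pool.map(toy_search, subqueries))
    merged: list[str] = []
    seen: set[str] = set()
    for batch in batches:  # pool.map preserves subquery order
        for item in batch:
            if item not in seen:
                seen.add(item)
                merged.append(item)
    return merged
```

For a room photo with a lamp in the foreground and a sofa in the background, `run_fan_out("mid-century living room", [...])` would issue three subqueries: the original text plus one per region. A real system would presumably also weight and rank the merged results, which this sketch leaves out.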

Benefits & Opportunities

- Stronger image strategies:

- Richer metadata matters:

- Conversational content pays off:

- More organic traffic potential:

- Testing & early advantage:

Challenges & Things to Watch

- The feature is limited to U.S. English at present. Many global markets haven’t seen it yet.

- Interpretation is imperfect. What you mean by “not too baggy” may not match Google’s interpretation.

- If your images are bland, low-quality or lack context, they may be ignored or penalized.

- Privacy, algorithmic biases and over-personalization may affect fairness and user trust.

- Edge cases (complex visuals, abstract ideas) may still be poorly handled.

How to Prepare & Adapt

- 1. Audit your image library

- 2. Improve metadata

- Use descriptive alt text, captions and structured data (schema markup).

- Mention style, material, usage, environment.

- 3. Write conversational product descriptions

- Use phrases people might say (not just keywords).

- Anticipate follow-up questions (“Show me more like this but …”).

- 4. Optimize your catalog and feeds

- Ensure variant attributes (color, size, prints) are clean and up to date.

- Use schema.org product markup and other structured data.

- 5. Test mixed-mode queries where possible

If your region has access, try searches that start with an image plus text on your own content, and measure how your pages and products appear.

- 6. Monitor new search reports & image insights

Keep track of traffic coming via visual search paths.
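
As a rough starting point for the audit and metadata steps above, the following Python sketch does two things: it lists images on a page that have no alt text, and it builds a schema.org `Product` JSON-LD snippet with style attributes. The helper names (`find_images_missing_alt`, `product_jsonld`) and the sample values are assumptions made for illustration; adapt the fields to your own catalog, and treat this as a sketch rather than a guarantee of how any search engine will use the markup.

```python
import json
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src") or "(no src)")


def find_images_missing_alt(html: str) -> list[str]:
    """Return the src values of images that lack descriptive alt text."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    return auditor.missing_alt


def product_jsonld(name: str, description: str, image_url: str,
                   color: str, material: str, size: str) -> str:
    """Build a schema.org Product JSON-LD string (hypothetical helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,  # conversational wording can go here
        "image": image_url,
        "color": color,
        "material": material,
        "size": size,
    }
    return json.dumps(data, indent=2)
```

Running `find_images_missing_alt` over your product page HTML gives a quick list of images to fix first, and the output of `product_jsonld` can be placed in a `<script type="application/ld+json">` tag on the page.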

Future Trends & Wider Context

- Search will become conversational-first and visual-first.

- Multimodal AI becomes the norm: systems will handle text, images, and perhaps even audio and video seamlessly.

- Discovery and shopping blur: what you browse and what you search for may merge.

- Larger AI ecosystems:

- Academic & technical advances: